We’re in an exciting age of AI. New tools, models, and agents seem to be cropping up daily. Businesses are automating like never before and becoming more efficient across the board. I’ve made a habit of asking every business owner I speak with what they’re doing with AI, and honestly, with how fast things are moving, it’s getting harder and harder for anyone to claim “expert” status.

At Moonward, we’ve taken a deliberate approach: AI-led from the top down. Everything we do starts with the question: “How can AI help us with this process?”

If we spot a task that’s repeatedly manual, we investigate how we can reduce or eliminate the effort entirely. A recent win for us was rolling out an AI-led code review tool that’s saved our team tens of hours every week.

Previously, every single piece of code written by our team had to be manually reviewed by a senior developer. Peer reviews like that require serious time, focus, and people power. So we built and deployed an AI-powered review tool that scans every code submission for vulnerabilities, bugs, logic flaws, and bad practices, all based on company-set rules and standards.
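We won’t go into the internals of our own tool here, but as a rough illustration, here’s a minimal Python sketch of how company-set rules might be combined with a code submission into a single review request for an LLM. The rules, the prompt wording and the `build_review_prompt` function are hypothetical examples, not our production setup.

```python
# Hypothetical company rules; in practice these might live in a shared config file.
COMPANY_RULES = [
    "Flag hard-coded secrets such as API keys or passwords.",
    "Flag SQL queries built by string concatenation (possible injection).",
    "Flag functions longer than 50 lines.",
]


def build_review_prompt(diff: str, rules: list) -> str:
    """Combine company rules and a code diff into a single review request."""
    numbered_rules = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return (
        "You are a senior code reviewer. Check the diff below against these rules:\n"
        f"{numbered_rules}\n\n"
        "For each issue, report the rule number, file, line and a short explanation.\n"
        "If there are no issues, reply 'No issues found'.\n\n"
        f"--- DIFF ---\n{diff}"
    )


# A made-up one-line diff that should trip the SQL rule.
sample_diff = '+    query = "SELECT * FROM users WHERE id = " + user_id'
print(build_review_prompt(sample_diff, COMPANY_RULES))
```

In a setup like this, the prompt would be sent to the model and its findings posted back on the submission for a human reviewer to confirm or dismiss.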

The result? Code still gets a human review, but the time spent is drastically reduced. We’ve cut down on human error, maximised our review coverage and freed our reviewers to focus on the most important aspects of the codebase. This is just one small example of how we’ve embedded AI into our workflow.

And if you’re reading this, I’m guessing you’re exploring similar opportunities in your business, but with one major concern:

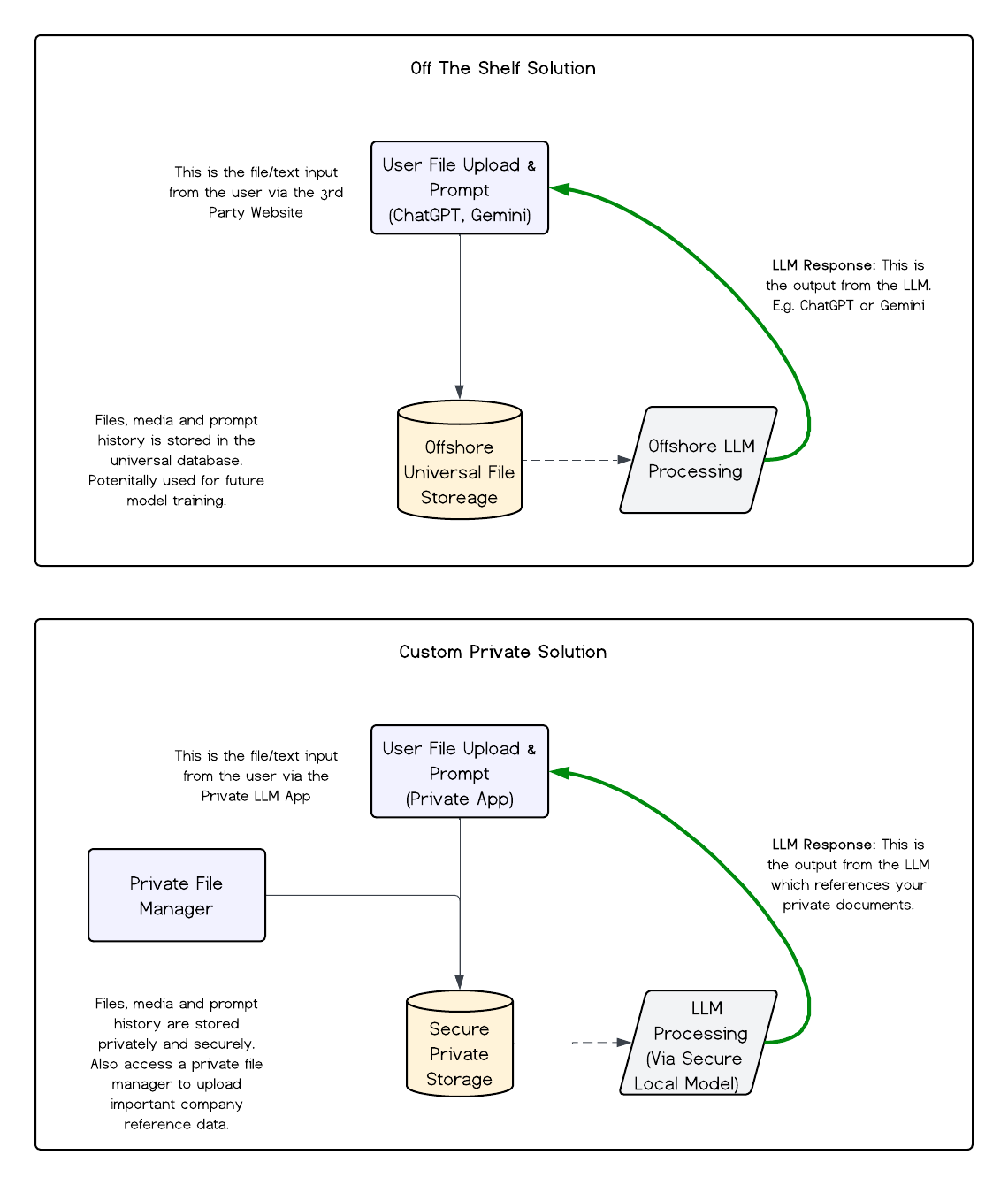

As much as third-party LLM providers love to talk about “security,” the moment you send your data to tools like ChatGPT or Gemini, that information is heading into an offshore black box. You lose control of where it goes, who stores it, and how it’s used.

Before I dive into how you can solve that, let’s quickly cover how LLMs actually work, and what’s happening to your data when you use these tools.

At a high level, a Large Language Model (LLM) is a prediction engine. Think of it like a supercomputer that rapidly scans your input, spots patterns, and guesses what should come next, whether it’s writing text, reviewing code, or analysing images.
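To make the “guess what comes next” idea concrete, here’s a deliberately tiny Python sketch: it counts which word follows which in a sample text and predicts the most common follower. Real LLMs use neural networks with billions of parameters working on sub-word tokens, so treat this bigram counter as a toy illustration of the principle, not how ChatGPT is built.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if it never appeared."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


corpus = (
    "the code review found a bug . the code review found a typo . "
    "the team fixed the bug"
)
model = train_bigrams(corpus)
print(predict_next(model, "code"))   # review
print(predict_next(model, "found"))  # a
print(predict_next(model, "the"))    # code (seen twice, vs "team" and "bug" once each)
```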

Say you upload a photo of a banana into ChatGPT and ask what it is. The LLM transforms that image into a series of numbers that represent features like colour, shape, and texture. Then it matches those patterns against what it learned from millions of examples during training to confidently say: “That’s a banana.”
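Here’s a similarly simplified sketch of the banana example. It assumes three hand-picked, made-up features (yellowness, elongation and smoothness, each scored 0 to 1) and a fixed “prototype” for each fruit, then picks the closest match by cosine similarity. Real image models learn thousands of features automatically and don’t keep a lookup table like this; the point is only that the photo becomes numbers, and the answer comes from comparing patterns.

```python
import math

# Made-up "learned" prototypes: [yellowness, elongation, smoothness], each 0 to 1.
PROTOTYPES = {
    "banana": [0.9, 0.9, 0.8],
    "apple": [0.3, 0.2, 0.9],
    "lemon": [0.9, 0.3, 0.4],
}


def cosine_similarity(a, b):
    """How closely two feature vectors point in the same direction (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify(features):
    """Return the label whose prototype is most similar to the input features."""
    return max(PROTOTYPES, key=lambda label: cosine_similarity(features, PROTOTYPES[label]))


# Pretend these numbers were extracted from the uploaded photo.
photo_features = [0.85, 0.95, 0.7]
print(classify(photo_features))  # banana
```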

It’s not seeing the way humans do; it’s pattern matching at scale. The same thing happens when you ask it to write an email, generate a contract, or analyse data.

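So why does this matter? Because LLMs are trained on enormous datasets, and many providers (including OpenAI, the company behind ChatGPT) may use your inputs, meaning your files, your code and your IP, to further train their models, depending on your plan and settings. (The “P” in ChatGPT stands for “pre-trained”, a reminder that these models are built from vast amounts of training data.)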

When you upload a document or send a prompt, there’s a good chance, depending on the provider and your account settings, that your data is being stored, analysed, and potentially used to improve future versions of that model.

This isn’t a conspiracy theory; it’s documented practice. In 2023, Samsung made headlines when employees uploaded proprietary source code and meeting notes into ChatGPT. That data ended up on OpenAI’s servers, outside Samsung’s control and potentially available for training. Samsung responded by banning generative AI tools on company devices and building its own private AI system to protect its data. To this day, Samsung still promotes the use of its internal LLM over external systems.

There are countless similar stories across governments, military departments, and private businesses, from SMEs to large corporates. And chances are, your team is either already uploading sensitive data into public LLMs on a daily basis, or avoiding AI tools altogether due to these very concerns.

Knowing all this, I wanted to share how we’re helping companies adopt AI with confidence, without compromising security or breaching compliance laws.

Here’s the crux of it: off-the-shelf AI tools typically store your inputs, including prompts, chats, documents, and files. These are usually housed offshore (often in the US or EU) and can be used to train future versions of the LLM. For Australian businesses, this not only presents a significant privacy and IP risk; it can also put you in breach of your obligations under the Australian Privacy Act.

But here’s the good news: you don’t have to avoid AI. You just need the right environment to use it.

Over the past 12 months, we’ve seen a growing number of companies, including ourselves and our clients, adopt a new approach to AI that enables meaningful automation while keeping data and IP fully protected.

Rather than using tools like ChatGPT or Gemini in their standard public environments, this setup creates a private AI workspace. It offers the same core capabilities of modern LLMs, but without exposing sensitive information to third-party platforms or offshore servers.

Here’s how it typically works:

• Internal documents such as client templates, process manuals, contracts, or training guides are uploaded into a private, encrypted database.

• When a team member submits a prompt, the system uses this internal data as context, paired with a standard LLM, to generate a relevant response.

• Crucially, the LLM itself is hosted locally, often using an open-weight model built on the same transformer architecture that powers ChatGPT and Gemini, just deployed in a way that ensures data stays onshore, within the organisation’s own infrastructure.
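For the technically minded, the flow above is commonly called retrieval-augmented generation (RAG). Here’s a minimal Python sketch of the retrieval and prompt-building steps, assuming a handful of made-up internal documents and a simple word-overlap score standing in for the vector search a production system would typically use. `call_local_llm` is a hypothetical placeholder for whichever self-hosted model you run; it is deliberately left unimplemented.

```python
import re

# Hypothetical internal documents; in a real setup these sit in a private, encrypted store.
DOCUMENTS = {
    "leave-policy.md": "Staff accrue four weeks of annual leave per year. Leave requests go to your manager.",
    "contract-template.md": "Standard client contracts include a 30-day payment term and an IP assignment clause.",
    "onboarding.md": "New starters receive a laptop and complete security training in week one.",
}


def tokenize(text: str) -> set:
    """Lower-case the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict, k: int = 1) -> list:
    """Rank documents by words shared with the question (a stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda name: len(q & tokenize(docs[name])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: dict, k: int = 1) -> str:
    """Pair the best-matching internal documents with the user's question."""
    context = "\n\n".join(f"[{name}]\n{docs[name]}" for name in retrieve(question, docs, k))
    return (
        "Answer using only the internal context below. If the answer is not there, say so.\n\n"
        f"{context}\n\nQuestion: {question}"
    )


def call_local_llm(prompt: str) -> str:
    """Hypothetical placeholder: send the prompt to a model running on your own servers."""
    raise NotImplementedError("Wire this up to your self-hosted model endpoint.")


print(build_prompt("What payment term do client contracts use?", DOCUMENTS))
```

In practice you’d swap the word-overlap ranking for embeddings in a vector database, and point `call_local_llm` at a model served inside your own environment (self-hosted runtimes such as Ollama or vLLM are common choices). The key design point is that both the documents and the model stay within infrastructure you control.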

No data leaving your environment. No external storage. No unwanted model training.

Every interaction, from prompts to uploads, is logged and auditable, which makes compliance far easier to demonstrate. We’re finding that the end result offers the same speed, adaptability, and automation benefits of cutting-edge AI without compromising control or confidentiality.
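As an illustration of what logging every interaction can look like in practice, here’s a minimal Python sketch that appends one JSON record per prompt or upload to a log file. The field names, the user name and the choice to store a SHA-256 hash of the content rather than the content itself are assumptions for illustration: hashing lets you verify later whether a given prompt or document was submitted, without the log becoming yet another copy of sensitive data.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def log_interaction(path: str, user: str, action: str, content: str) -> dict:
    """Append one audit record for a prompt or upload as a JSON line."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,  # e.g. "prompt" or "upload"
        # Store a fingerprint of the content rather than the content itself.
        "sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
        "chars": len(content),
    }
    # Opening in append mode means existing entries are never overwritten.
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
    return record


def read_log(path: str) -> list:
    """Load every audit record from the log file."""
    with open(path, encoding="utf-8") as log_file:
        return [json.loads(line) for line in log_file if line.strip()]


# Example: log two interactions to a throwaway file and read them back.
log_path = os.path.join(tempfile.mkdtemp(), "audit.jsonl")
log_interaction(log_path, "jane.doe", "prompt", "Summarise the attached client contract.")
log_interaction(log_path, "jane.doe", "upload", "contract-v3.pdf contents...")
for entry in read_log(log_path):
    print(entry["timestamp"], entry["user"], entry["action"], entry["sha256"][:12])
```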

We’re seeing that this approach isn’t just about plugging a security gap; it’s a gateway to building genuinely powerful internal tools. We’ve used this setup ourselves, and we’ve seen our clients do the same, unlocking AI-driven workflows across their businesses.

Once this infrastructure is in place, it becomes the foundation for building your own internal AI systems and automation.

And the best part? It doesn’t take months to build. Some teams, including our own, have gone from idea to live system in just a few days.

So there you have it: a snapshot of how AI-led Australian businesses are taking a more deliberate, safer path forward.

Rather than stepping back from AI due to privacy fears, more teams are leaning into private, onshore systems that offer confidence, control, and the freedom to innovate.

If you’re curious about how this might look in your own business, feel free to flick me an email at andrew@moonward.com.au. Always happy to share what’s working, what to watch out for, and where to start.